Center for Extreme Events Research activities are built upon:

Unique Testing Facilities

Unrivaled Computational Expertise

- Meshfree method

- Modal analysis and physical modeling

- Isogeometric analysis

- Data-driven reduced-order modeling

- Multifidelity uncertainty quantification (UQ)

Combined Experimental and Computational Technologies

UNIQUE TESTING FACILITIES

UC San Diego is home to a number of experimental testing facilities that are not found anywhere else in the nation. These facilities enable us to perform sub-scale to near-full-scale experimental investigation of damage mechanisms at coupon, component, and system levels — for a thorough understanding of damage initiation, propagation, and total collapse. Facilities include the following:

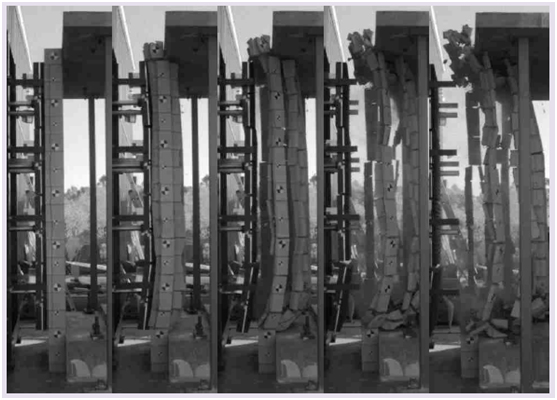

Extreme events simulator

Often referred to as the “blast simulator,” this unique testing facility can be used to develop better methods for designing and retrofitting buildings and structures to withstand a wide range of extreme events. The simulator uses hydraulic/high-pressure nitrogen-based actuators to generate mechanical loads that simulate real blasts of up to 12,000 psi-msec. The individual actuators can be configured to mimic both the blast and impact loads of various extreme events. Because there is no fireball, the actual response of the structure to the blast load can be seen and recorded with high-speed (5,000-10,000 frames per second) Phantom cameras.

Unique attributes of the extreme events simulator

- Six blast generators

- Wide range of simulated blast and impact load parameters

- Large number of possible configurations for test setup

- No fireball, no smoke

- “Progressive failure” from beginning to end can be seen during testing

Gas Gun Facilities

UC San Diego’s gas gun facilities enable world-class projectile impact and penetration research focused on the impact effects on composite materials and aerospace structures.

Specifications

- 79 mm gas gun (250 meters/second)

- 25 mm gas gun (over 1000 meters/second)

- Split-Hopkinson pressure bar for measuring pressure wave propagation under various impact loading conditions

Multifunctional Materials

The ARMOR Lab (http://armor.ucsd.edu/) at UC San Diego utilizes nano-engineering principles, scalable techniques, and field-deployable methods to design multifunctional materials and new sensor technologies. First, materials design and manufacturing are achieved using a diverse suite of wet chemistry, materials processing, and nanocomposite fabrication tools. The lab is equipped with a fume hood, spin-coater, robotic spray fabrication tool, vacuum ovens, ultrasonicators, and homogenizers. Second, characterization of new materials and technologies is performed using various analog/digital electronic instruments (e.g., oscilloscopes, multimeters, generators, impedance analyzers, data acquisition systems, and source measurement units) and mechanical load frames. Lastly, validation and testing of new technologies draw on the lab's extensive collection of sensing transducers and scaled test structures.

UNRIVALED COMPUTATIONAL EXPERTISE

We assess and optimize safety and resilience for structures subjected to extreme events through simulation-based prediction and optimization. Our unique computational capabilities are effective for modeling phenomena that commonly occur in extreme events, such as shock dynamics, material damage, and fragmentation. Our expertise spans the following areas:

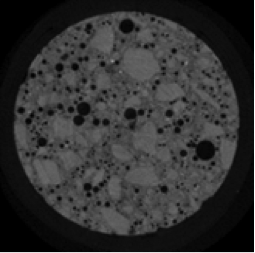

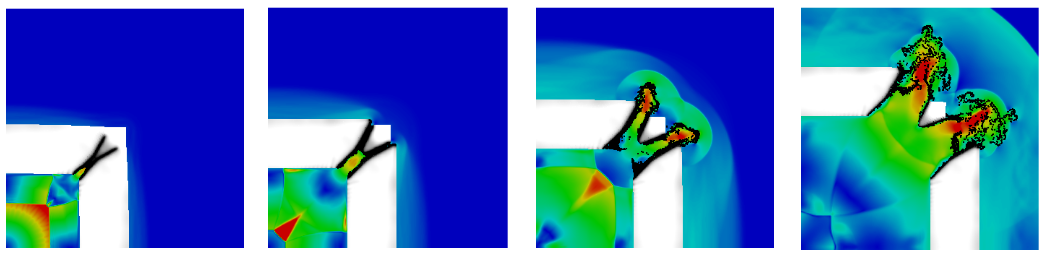

Meshfree Method

The meshfree method is a new paradigm for computational mechanics that allows simulation models to be constructed entirely from a scattered set of points (as opposed to conventional mesh-based computational methods). The meshfree method offers new opportunities in simulation-based science and engineering for problems where conventional mesh-based methods cannot deliver reliable solutions. Examples related to extreme events include large deformations, crack propagation, high-velocity contact-impact, fragmentation, and penetration.

The meshfree method also offers an effective means of modeling biomaterials where the pixels in the medical images can be used directly as the simulation model.
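As a rough illustration of how an approximation can be built from scattered points alone, the following sketch constructs one-dimensional reproducing kernel (RK) shape functions, the building block of RKPM. The kernel choice, the linear basis, the node layout, and the support size are all assumptions made for this example; it is not the Center's production code.

```python
import numpy as np

def cubic_bspline_kernel(z):
    """Cubic B-spline kernel with compact support |z| <= 1."""
    z = np.abs(z)
    w = np.zeros_like(z)
    inner = z <= 0.5
    outer = (z > 0.5) & (z <= 1.0)
    w[inner] = 2.0 / 3.0 - 4.0 * z[inner] ** 2 + 4.0 * z[inner] ** 3
    w[outer] = (4.0 / 3.0 - 4.0 * z[outer] + 4.0 * z[outer] ** 2
                - (4.0 / 3.0) * z[outer] ** 3)
    return w

def rk_shape_functions(x, nodes, support):
    """RK shape functions with a linear basis, evaluated at the point x.

    Psi_I(x) = H(0)^T M(x)^{-1} H(x - x_I) w((x - x_I) / support),
    where H(s) = [1, s] and M(x) is the 2x2 moment matrix.
    """
    d = x - nodes                            # shifted coordinates x - x_I
    w = cubic_bspline_kernel(d / support)    # kernel weight of each node
    H = np.vstack([np.ones_like(d), d])      # basis vectors, shape (2, N)
    M = (H * w) @ H.T                        # moment matrix
    b = np.linalg.solve(M, np.array([1.0, 0.0]))  # M^{-1} H(0)
    return (b @ H) * w

# Scattered (jittered) nodes on [0, 1]; no mesh connectivity is needed.
rng = np.random.default_rng(0)
nodes = np.linspace(0.0, 1.0, 11)
nodes[1:-1] += rng.uniform(-0.02, 0.02, size=9)

psi = rk_shape_functions(0.37, nodes, support=0.25)
print("partition of unity:", psi.sum())       # close to 1
print("linear reproduction:", psi @ nodes)    # close to 0.37
```

The two printed checks reflect the reproducing conditions: the shape functions sum to one and reproduce linear fields exactly, even though the nodes are irregularly placed.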

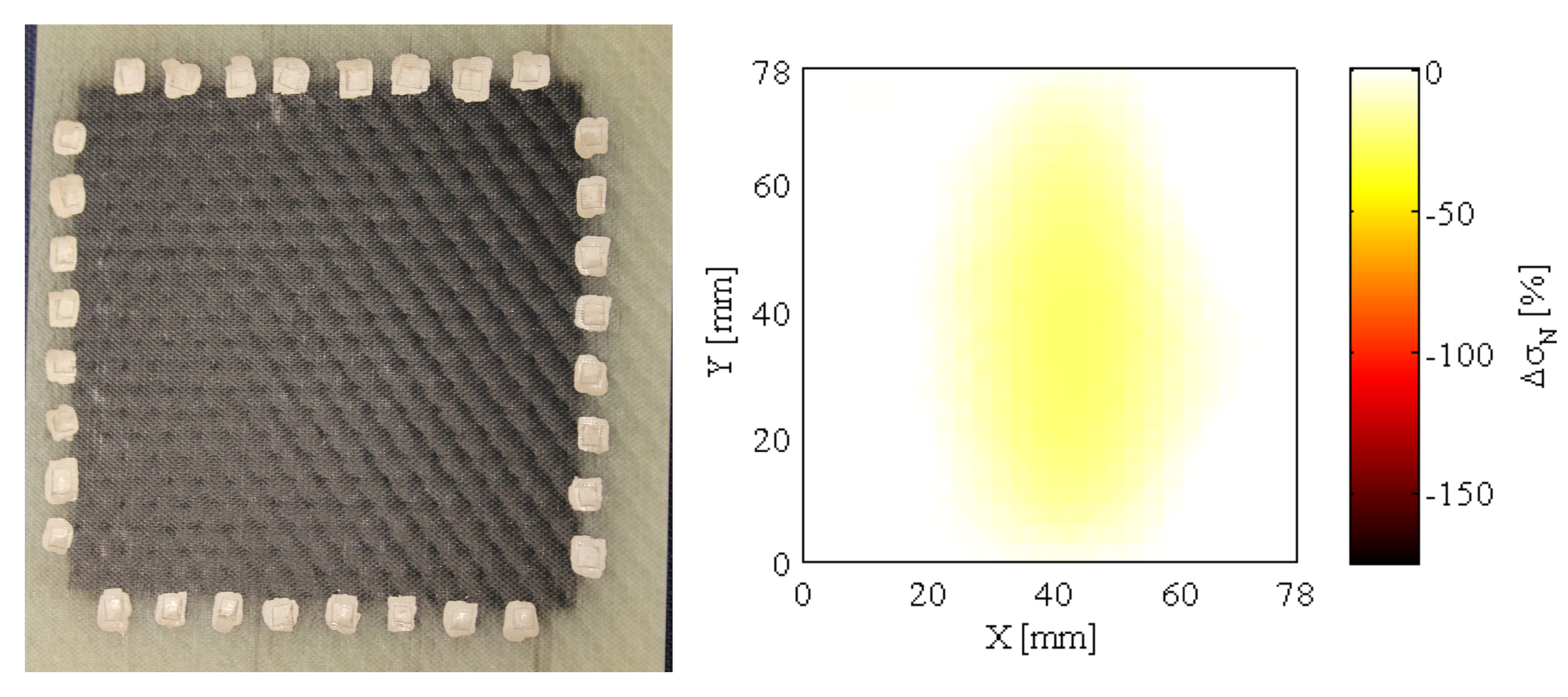

Sample of concrete microstructure and meshfree modeling of micro-crack propagation in concrete subjected to shear deformation.

Research example: Bullet penetration into a concrete panel

Research example: RKPM modeling of landslide under San Fernando earthquake

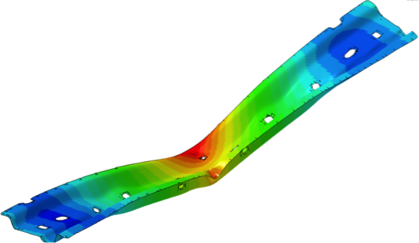

Modal analysis and physical modeling

Extreme events in fluid flows, such as rogue waves in the ocean, a variety of extreme weather phenomena, cavitation inception, turbulent bursts, and relaminarization, to name only a few, play a crucial role in aerospace and nautical engineering. To predict such events, the Computational Modeling and Flow Physics Group at UC San Diego works on statistical methods that identify precursors of such events from large data sets. In particular, our group has developed a conditional space-time proper orthogonal decomposition (POD) formulation that is tailored to the eduction of rare or intermittent events. By construction, the resulting spatio-temporal modes are coherent in space and over a finite time horizon, and optimally capture the energy of the flow. For the example of intermittent acoustic radiation from a turbulent jet shown in the video, we extract the statistically loudest event from high-fidelity simulation data. The method identifies the statistically most significant ‘prototype’ burst event and tracks its evolution over time. After the prototype event is extracted from data, we explore its physical origin to devise predictive theoretical models.
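For readers unfamiliar with POD, the sketch below shows the standard space-only snapshot POD computed with a singular value decomposition. It is a simplified stand-in and not the conditional space-time formulation described above; the synthetic data, the function name `pod`, and all parameters are assumptions for illustration.

```python
import numpy as np

def pod(snapshots, n_modes):
    """Space-only POD of a snapshot matrix of shape (n_space, n_time).

    Returns the leading spatial modes and the fraction of fluctuation
    energy captured by each of them.
    """
    mean = snapshots.mean(axis=1, keepdims=True)
    fluct = snapshots - mean                   # remove the temporal mean
    U, s, _ = np.linalg.svd(fluct, full_matrices=False)
    energy = s ** 2 / np.sum(s ** 2)           # energy fraction per mode
    return U[:, :n_modes], energy[:n_modes]

# Synthetic data: two coherent structures plus weak noise (assumed).
rng = np.random.default_rng(1)
x = np.linspace(0.0, 2.0 * np.pi, 128)[:, None]
t = np.linspace(0.0, 10.0, 200)[None, :]
q = (2.0 * np.sin(x) * np.cos(3.0 * t)
     + 0.5 * np.sin(2.0 * x) * np.sin(5.0 * t)
     + 1e-3 * rng.standard_normal((128, 200)))

modes, energy = pod(q, n_modes=2)
print("energy captured by two modes:", energy.sum())
```

The modes are orthonormal by construction, and for this data set the two leading modes carry nearly all of the fluctuation energy, which is the optimality property referred to in the text.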

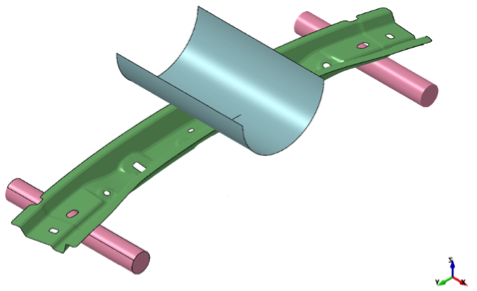

Isogeometric Analysis

Isogeometric analysis is a recently developed computational approach that offers the possibility of integrating finite element analysis (FEA) into conventional NURBS-based CAD design tools. Currently, it is necessary to convert data between CAD and FEA packages to analyze new designs during development — a difficult task since the computational geometric approach for each is different. Isogeometric analysis employs complex NURBS geometry (the basis of most CAD packages) in the FEA application directly. This allows models to be designed, tested and adjusted in one go, using a common data set.

To facilitate technology transfer to the center's partners, some isogeometric capabilities are developed using the open-source library tIGAr.
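To make the idea of analyzing directly on CAD geometry more concrete, the sketch below evaluates NURBS basis functions with the Cox-de Boor recursion and uses them to represent a quarter circle exactly, something piecewise-polynomial finite elements can only approximate. It is written in plain NumPy and does not use the tIGAr API; the function names and the example geometry are assumptions for illustration.

```python
import numpy as np

def bspline_basis(i, p, knots, u):
    """Value of the i-th B-spline basis function of degree p at u
    (Cox-de Boor recursion)."""
    if p == 0:
        if knots[i] <= u < knots[i + 1]:
            return 1.0
        # Close the last non-empty span so that u == knots[-1] is covered.
        if u == knots[-1] and knots[i] < knots[i + 1] and knots[i + 1] == knots[-1]:
            return 1.0
        return 0.0
    left = right = 0.0
    d1 = knots[i + p] - knots[i]
    if d1 > 0.0:
        left = (u - knots[i]) / d1 * bspline_basis(i, p - 1, knots, u)
    d2 = knots[i + p + 1] - knots[i + 1]
    if d2 > 0.0:
        right = (knots[i + p + 1] - u) / d2 * bspline_basis(i + 1, p - 1, knots, u)
    return left + right

def nurbs_point(u, ctrl, weights, knots, p):
    """Point on a NURBS curve: rational combination of control points."""
    N = np.array([bspline_basis(i, p, knots, u) for i in range(len(ctrl))])
    R = weights * N / (weights @ N)            # rational basis functions
    return R @ ctrl

# Quadratic NURBS description of a unit quarter circle.
knots = [0.0, 0.0, 0.0, 1.0, 1.0, 1.0]
ctrl = np.array([[1.0, 0.0], [1.0, 1.0], [0.0, 1.0]])
weights = np.array([1.0, 1.0 / np.sqrt(2.0), 1.0])

for u in (0.0, 0.25, 0.5, 1.0):
    point = nurbs_point(u, ctrl, weights, knots, p=2)
    print(u, point, np.linalg.norm(point))     # the radius stays at 1
```

In an isogeometric solver the same rational functions `R` would also serve as the trial and test functions for the analysis, which is what removes the CAD-to-mesh conversion step.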

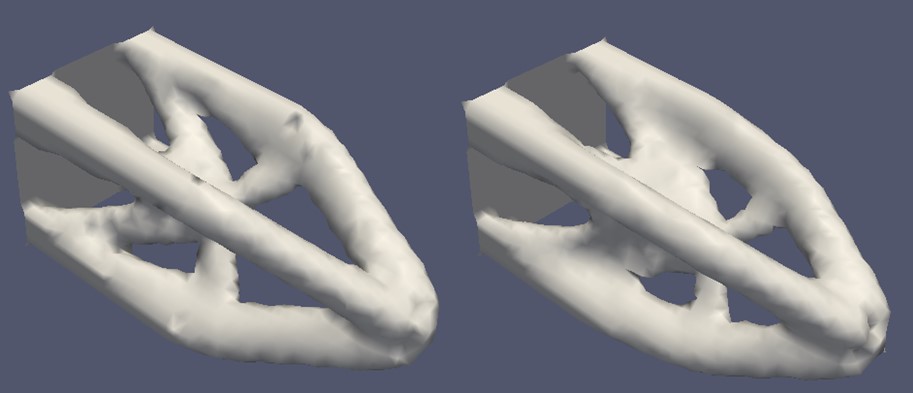

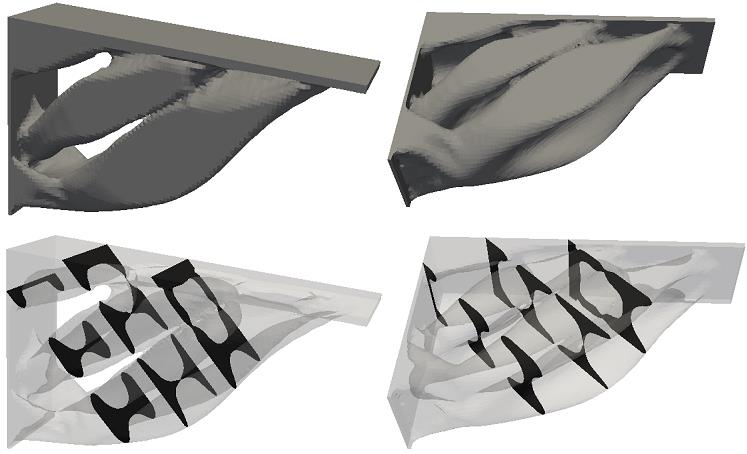

Immersed methods

The development of complicated multiphysics analysis models can be accelerated significantly by numerical techniques that don't require the computational models of sub-problems to match up at interfaces. We collectively refer to these techniques as "immersed methods". There is particular synergy between immersed and isogeometric methods—the combination of which is sometimes called "immersogeometric analysis"—due to the non-watertight and/or boundary-only representations of geometry in CAD. Researchers at the center have successfully combined meshfree, isogeometric, and more traditional computational models via immersed methods to carry out complicated coupled analyses ranging from fluid–structure interaction of medical devices to fragmentation of structures subjected to blast waves.

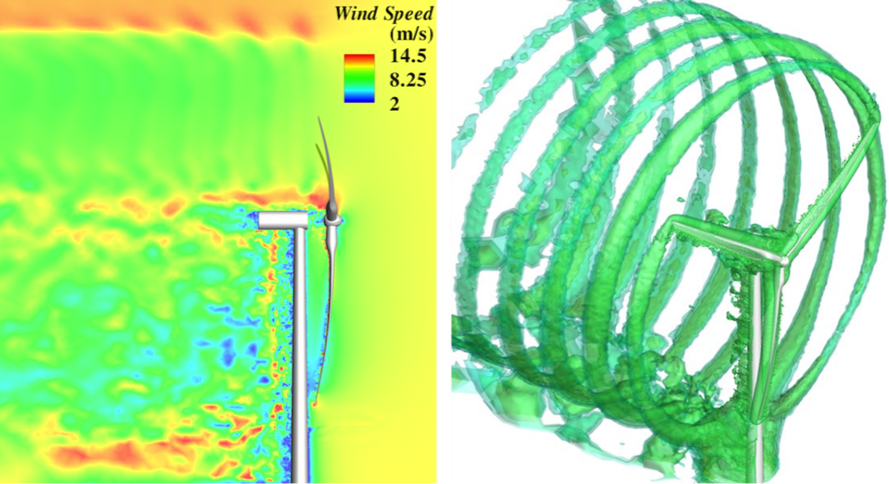

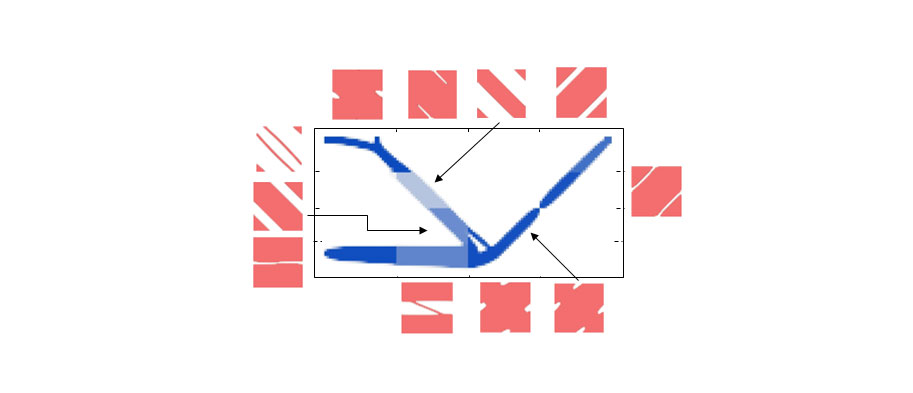

Data-driven reduced-order modeling

Reduced-order modeling (ROM) is a mathematically sound framework for building computationally efficient surrogate models of large-scale systems. As a special case, data-driven reduced-order modeling has become increasingly relevant due to 1) the vast availability of data, 2) hard-to-access legacy computer codes that make intrusive methods challenging, and 3) the monumental challenge of modeling complex, coupled, multiphysics, multiscale systems. Research in data-driven, physics-based ROMs focuses on using data together with physics-based models. Our research in data-driven model reduction (e.g., Eigensystem Realization Algorithm, Dynamic Mode Decomposition, Operator Inference) as well as physics-based model reduction (Galerkin proper orthogonal decomposition; balanced truncation) has led to tremendous speed-ups in modeling complex fluid flows and combustion applications.
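As one concrete instance of the data-driven methods named above, here is a minimal sketch of Dynamic Mode Decomposition (DMD), which fits a low-rank linear operator to pairs of consecutive snapshots without access to the code that produced them. The synthetic test system, the function name `dmd`, and the chosen rank are assumptions for this example; it is not the Center's implementation.

```python
import numpy as np

def dmd(X, Y, rank):
    """Exact DMD: find a rank-limited linear map A with Y ~ A X.

    X and Y hold snapshots column-wise, with Y advanced one step in time.
    Returns the DMD eigenvalues and modes.
    """
    U, s, Vh = np.linalg.svd(X, full_matrices=False)
    U, s, Vh = U[:, :rank], s[:rank], Vh[:rank]
    V_scaled = Vh.conj().T / s                 # V * diag(1 / s)
    A_tilde = U.conj().T @ Y @ V_scaled        # operator in POD coordinates
    eigvals, W = np.linalg.eig(A_tilde)
    modes = Y @ V_scaled @ W
    return eigvals, modes

# Synthetic data: a damped 2-D rotation hidden in a 50-dimensional state.
r, theta = 0.95, 0.3
A_latent = r * np.array([[np.cos(theta), -np.sin(theta)],
                         [np.sin(theta),  np.cos(theta)]])
rng = np.random.default_rng(2)
Q, _ = np.linalg.qr(rng.standard_normal((50, 2)))   # embedding basis

z = np.array([1.0, 0.0])
snapshots = []
for _ in range(41):
    snapshots.append(Q @ z)
    z = A_latent @ z
data = np.array(snapshots).T                   # shape (50, 41)

eigvals, modes = dmd(data[:, :-1], data[:, 1:], rank=2)
print("recovered |lambda|:", np.abs(eigvals))
print("recovered angles:  ", np.sort(np.angle(eigvals)))
```

For this noise-free linear system the eigenvalues recovered from data match the decay rate 0.95 and rotation angle of plus or minus 0.3 radians built into the latent dynamics, using only a 2x2 operator instead of the full 50x50 one.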

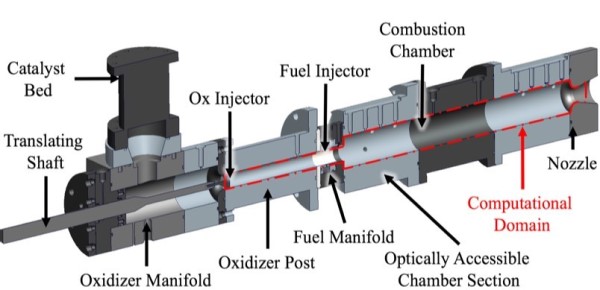

Model combustor setup

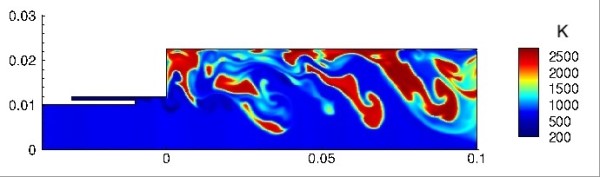

True temperature from the high-fidelity model

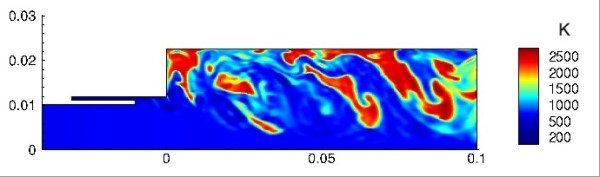

ROM-predicted temperature (simulation time speedup of five orders of magnitude)

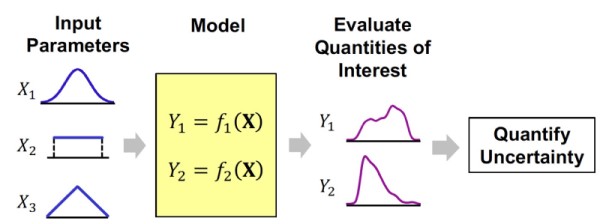

Multifidelity uncertainty quantification (UQ)

Quantifying the uncertainty in model outcomes given uncertain model input parameters is challenging when complex systems are involved. Computing statistical information (mean, variance, failure probabilities, risk measures) from quantities of interest often requires a large number of model evaluations. Our multifidelity uncertainty quantification (UQ) approach leverages information from surrogate models of varying fidelity and computational cost to efficiently solve the UQ task at hand. Such methods are attractive because they move most of the computational work to lower-fidelity models and decrease the number of expensive high-fidelity model evaluations. Research in this area includes the use of ROMs in UQ, robust optimization, and reliability-based design to reduce the computational cost while providing statistical guarantees for the overall method.
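The idea of shifting work to cheaper models can be sketched with a two-model control-variate estimator of a mean. This is a generic textbook construction under stated assumptions, not the Center's specific method: the "high-fidelity" model `exp(z)`, its Taylor-polynomial surrogate, the sample sizes, and the function names are all hypothetical, and estimating the coefficient `alpha` from the same samples introduces a small bias that is ignored here.

```python
import numpy as np

def multifidelity_mean(f_hi, f_lo, n_hi, n_lo, sampler, rng):
    """Control-variate estimate of E[f_hi(Z)] using a cheap model f_lo.

    Only n_hi expensive evaluations are used; the cheap model is
    evaluated on those inputs and on n_lo additional samples.
    """
    z_hi = sampler(rng, n_hi)
    y_hi = f_hi(z_hi)                          # few expensive evaluations
    y_lo_paired = f_lo(z_hi)                   # cheap model, same inputs
    y_lo_many = f_lo(sampler(rng, n_lo))       # many cheap evaluations
    cov = np.cov(y_hi, y_lo_paired)
    alpha = cov[0, 1] / cov[1, 1]              # control-variate coefficient
    return y_hi.mean() + alpha * (y_lo_many.mean() - y_lo_paired.mean())

def f_hi(z):
    """Stand-in for an expensive high-fidelity model (assumed)."""
    return np.exp(z)

def f_lo(z):
    """Stand-in for a cheap surrogate: second-order Taylor expansion."""
    return 1.0 + z + 0.5 * z ** 2

def sampler(rng, n):
    """Uncertain input Z ~ Uniform(0, 1) (assumed)."""
    return rng.uniform(0.0, 1.0, size=n)

rng = np.random.default_rng(3)
estimate = multifidelity_mean(f_hi, f_lo, n_hi=50, n_lo=100_000,
                              sampler=sampler, rng=rng)
print("multifidelity estimate:", estimate)
print("exact mean:            ", np.e - 1.0)
```

Because the surrogate is strongly correlated with the high-fidelity model, the correction term removes most of the sampling error of the 50 expensive evaluations, so the estimate behaves as if far more high-fidelity runs had been made.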

COMBINED EXPERIMENTAL AND COMPUTATIONAL TECHNOLOGIES

The Center works at the cutting edge of what is possible by combining the most advanced experimental and computational technologies for extreme events research. These assets are an important part of threat estimation and damage mitigation. The large-scale computational simulations are validated against tests in our extreme events simulator and impact-testing facilities. The validated computational capabilities are then fully integrated to provide fast damage assessment of structures during recovery efforts.

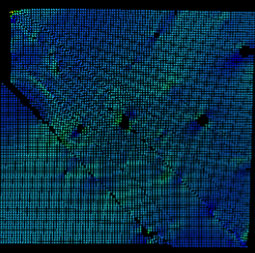

Computational Software

Center faculty develop stable and effective computational software based on modern methods in computational mechanics (i.e., meshfree and isogeometric analysis techniques) and numerical algorithms for combined Lagrangian and Eulerian multi-scale modeling of blast effects and shock dynamics, sub-scale and near-full-scale experimental investigation of damage mechanisms at the component and system levels, and multi-scale verification and validation for a thorough understanding of damage initiation, propagation, and total collapse.

Topology optimization

Topology optimization is the most general form of structural optimization; it offers the greatest benefits and can produce creative and revolutionary design solutions. It relies on the finite element method to carry out sensitivity analysis, which drives the optimizer toward the optimum design solution. The research at UC San Diego utilizes the level set method and focuses on developing coupled multiscale and multiphysics topology optimization for materials and structures. For further information, visit the M2DO lab website.

Spatial Damage Characterization

The integrity and performance of structural systems depend on monitoring surface and sub-surface material damage throughout their operational service lifetimes. A core focus of the ARMOR Lab (http://armor.ucsd.edu/) is to nano-engineer multifunctional material systems that are endowed with properties intrinsically sensitive to damage and that, when coupled with tomographic algorithms, can identify the types, severities, and locations of damage in situ and nondestructively. Multifunctional materials in the form of spray-coated structural coatings, fiber-reinforced polymer (FRP) composites, self-sensing cement composites, and wearable fabrics have been validated for spatial strain, cracking, corrosion, pH, temperature, and pressure sensing.